AI

Helping changemakers navigate the world of AI

A Changemaker’s Compass for Navigating the World of AI

This hub is a practical starting point that guides you on a four-part journey: from understanding the core Dilemmas (the ethical, environmental, and privacy risks of AI adoption) and exploring Case Studies (real-world examples from our community), to building Practical AI Skills and, finally, adapting our Framework (our open-source manifesto and policy).

The Dilemmas

This first set of resources frames the hard questions we must ask. It explores the critical dilemmas, from algorithmic bias and environmental costs to data privacy and the AI divide, that we must navigate before building or deploying a solution.

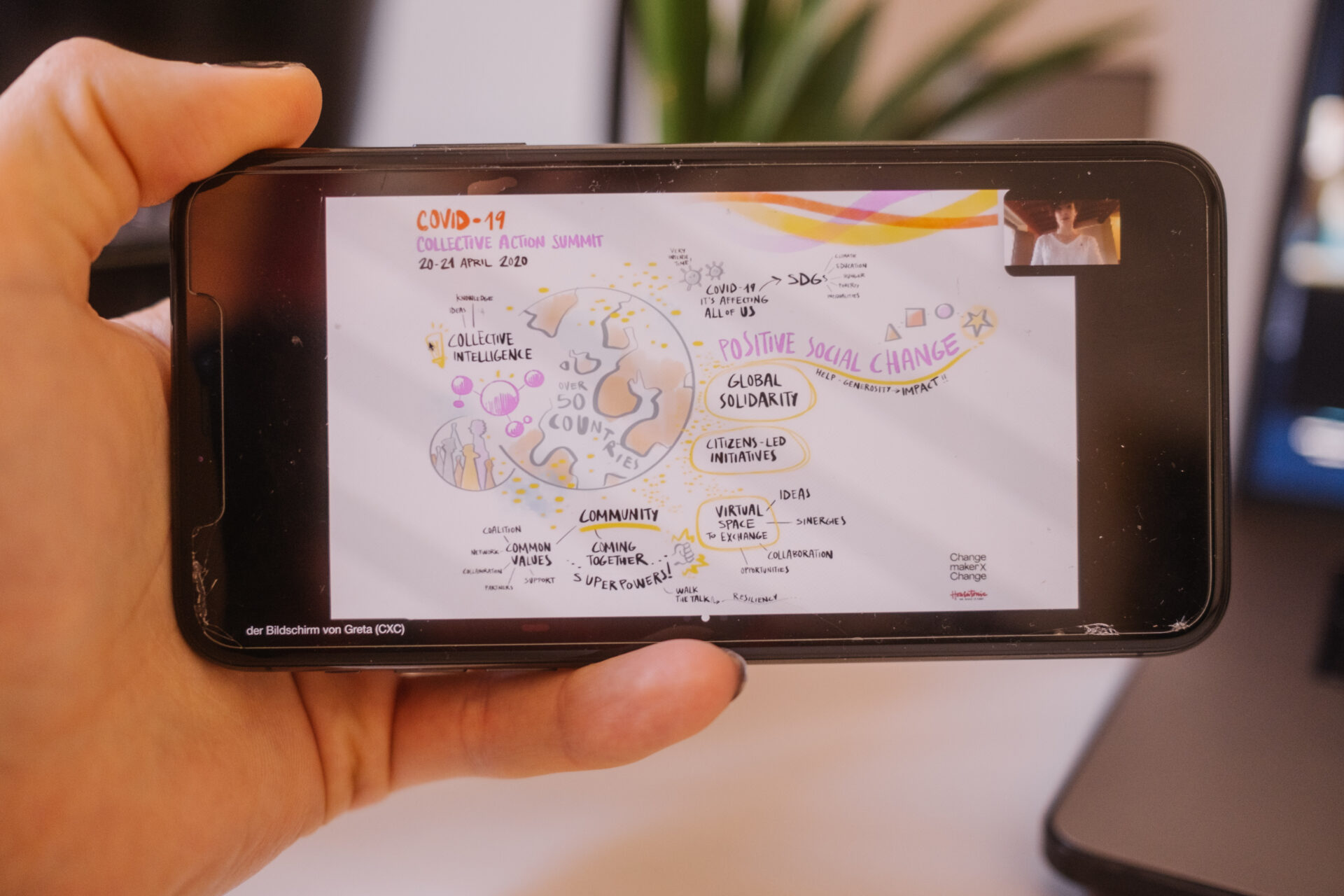

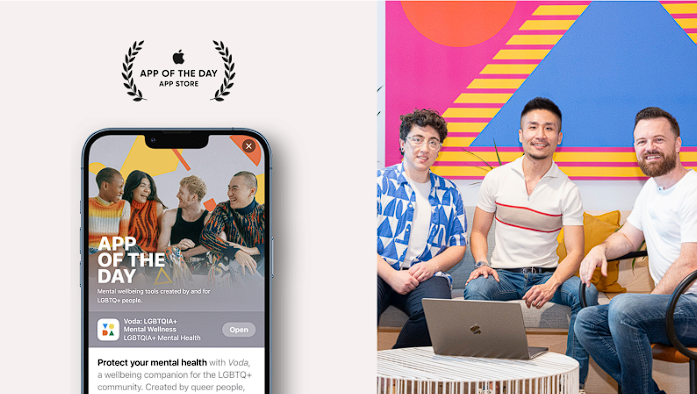

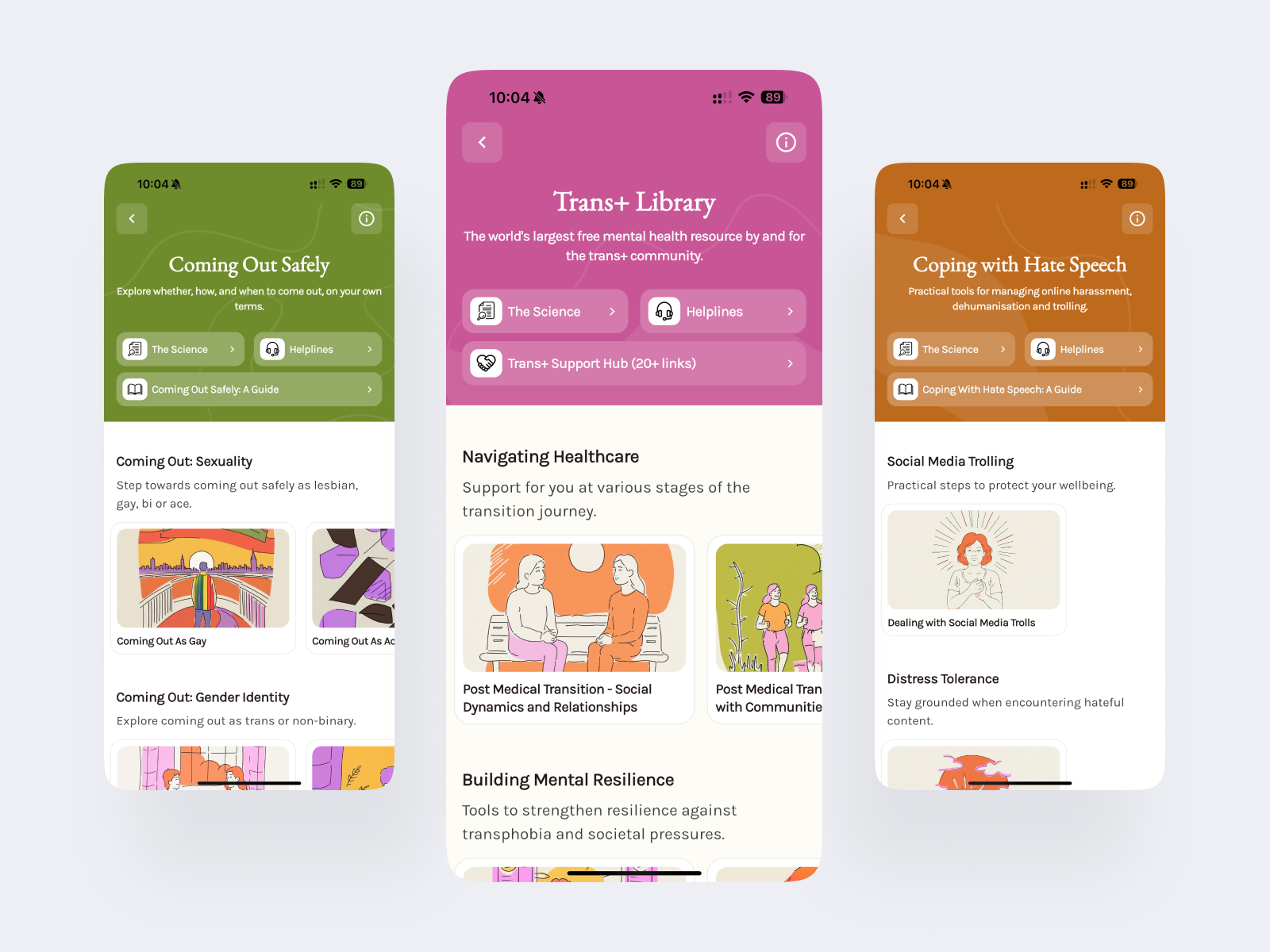

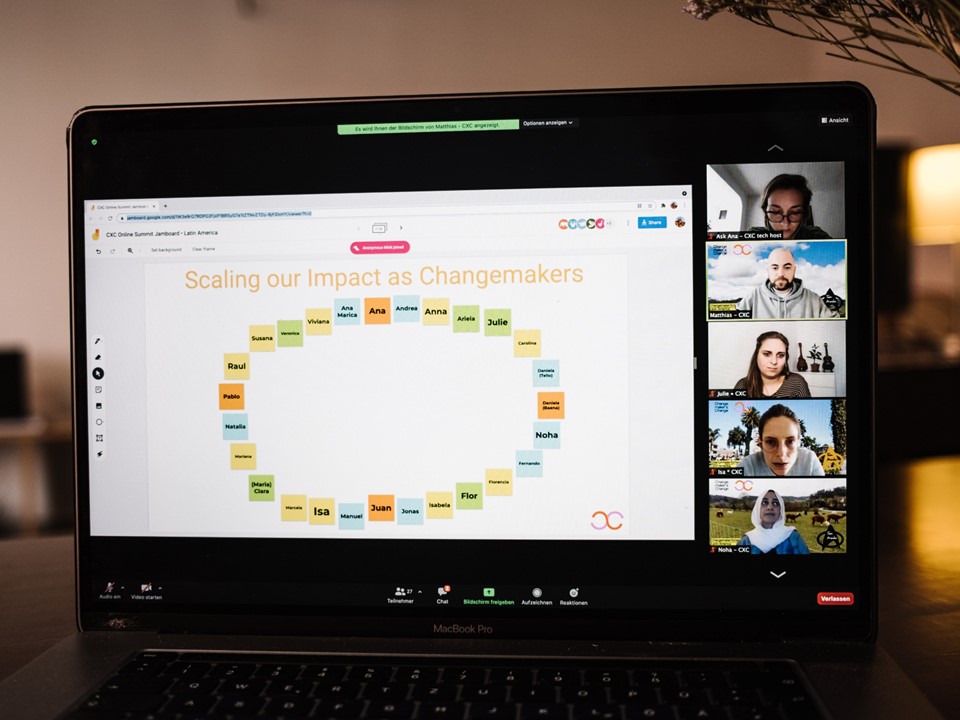

Case Studies

We share inspiring stories from changemakers in our community around the world who are already navigating these tensions and building AI-powered solutions with intention.

Building Practical AI Skills

Once we start experimenting with AI tools, the next step is learning to use them mindfully and ethically. This collection is a starting point for the ‘how’, from building an AI strategy to prompting to finding the right tools.

Our Mindful AI Manifesto & Policy

We share our own internal governance framework on how we mindfully approach AI, particularly Generative AI, as an open-source resource. We invite you to read it, adapt it, and use whatever serves your own journey.

Supported by

Pro Bono Tech Support

Find out more about new opportunities for changemakers based in, or with impact in, other regions, as well as news about our masterclasses, case studies and free AI resources. Sign up to our waitlist now and stay tuned!