MANIFESTO

Our Compass in the Age of AI

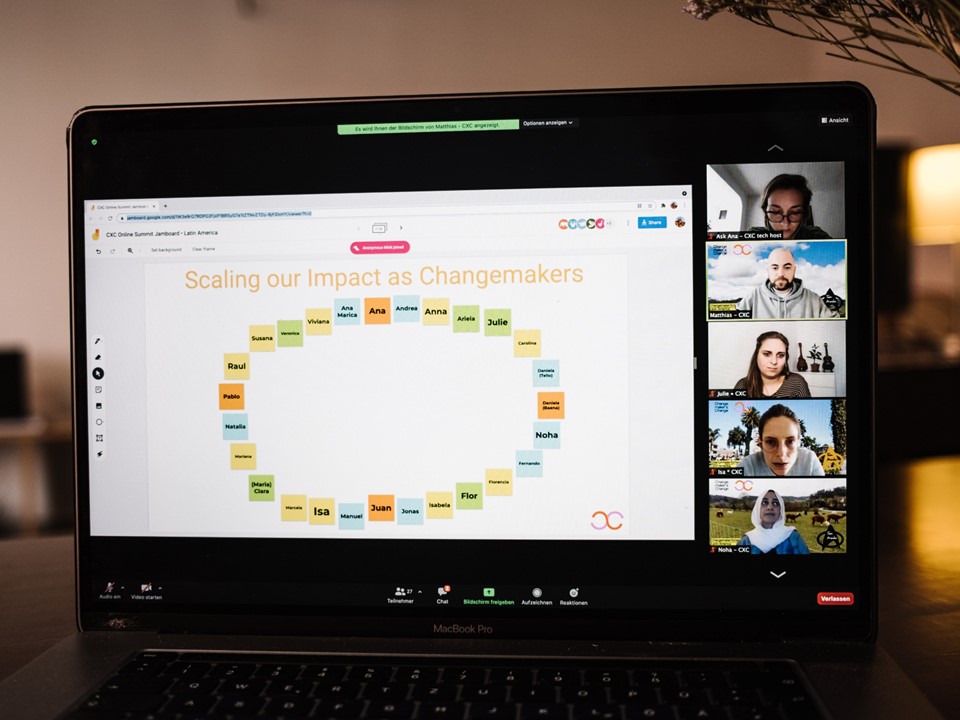

Human connection lies at the heart of everything we do at ChangemakerXchange, as we support individuals and groups through programmes that help them sustain, scale, or deepen their impact in the world.

The advent of Artificial Intelligence is already profoundly affecting how people connect and relate to themselves, to each other, and to the world around them. This transformation carries both potential and great risk for the social fabric we care so deeply about.

The term ‘AI’ has become a clunky catch-all, obscuring the vast differences in each tool’s ethical and environmental impact.

We see powerful potential in specific AI applications used by changemakers: from using image recognition to make second-hand shopping effortless, to powering digital interpreters for the deaf community, to discovering new materials for clean water through data modelling.

Yet we are deeply critical, especially of Generative AI, recognising its risks: the immense environmental toll of its data centres’ energy and water use, its tendency to amplify bias by being trained on an unequal past, and its potential to flatten the unique human creativity our work depends on.

We must also challenge the emerging ‘AI Empire’, the concentration of power and resources in a few hands, often built on hidden labour and environmental extraction, and ask who truly benefits.

Rooted in our Team Manifesto and Community Values, this AI Manifesto serves as our ‘why’, a compass, a guide to help us navigate the world of AI with intentionality, conscience, and integrity.

It is the foundation for our Mindful AI Policy, a separate document that details the ‘how’: the practical implications of AI for our governance, processes, and daily decision-making.

We believe the social impact sector must share the onus of shaping the present and future of AI to ensure it serves humanity and the planet. To claim our role in steering this technology towards a more just and regenerative present and future, we explore a different set of questions:

- ‘Should we?’, not just ‘Do we?’

- ‘What exactly are we optimising for? Are we unleashing or unlocking potential?’, not just ‘How do we become more efficient?’

- ‘How do we lead with integrity?’, not just ‘How do we comply?’

We invite others to take this Manifesto, use what serves them, and adapt it to their own journeys as we collectively learn to navigate this time of complexity and uncertainty with care and intention.

Our Mindful AI Principles

1. Mindful Use

AI is not the default. Before every potential use, particularly with generative AI, we try to pause and ask ourselves:

- Is AI (really) needed for this task?

- Is it (really) aligned with our values?

- What do I lose when I use this tool for this task? (e.g. creativity, authenticity or genuine human connection)

Some tasks call for our full presence and care, such as writing a heartfelt message to members, developing a theory of change, or designing a session for one of our gatherings. When such tasks are over-delegated to AI, they risk losing depth, authenticity or meaning.

Some of these tasks may still benefit from AI assistance, for example in refining language, improving clarity, or offering new perspectives. Others may be better suited to AI altogether, such as financial forecasting or identifying connections across large data sets.

Whatever the case, we assess each use not only for its practical benefits but also for its effects on human connection and for whether it keeps us true to our values.

2. Awareness and Responsibility

We acknowledge AI’s broader social and environmental footprint and aim to stay informed about its real impacts.

We seek to understand which tools, models and companies are more transparent and socially and environmentally responsible.

We will choose, where possible, options with lower footprints or stronger ethical practices and aim to share what we learn with others in our network and community.

3. Human at the Core

We celebrate the spark of human creativity and the beautiful imperfections of authentic expression. While AI can be a powerful assistant, we believe ultimate decision-making must remain firmly in our hands: not just keeping ‘a human in the loop’, but retaining real agency and oversight.

In communications, we guard against ‘AI slop’ (content that feels generic or lacks substance) and ensure our voice, stories, and connections remain recognisably human. AI may assist with tone or clarity, but it cannot replace the empathy or humour that give our words life.

While AI can support our work in numerous ways, it must not replace our responsibility as ultimate decision-makers. Whether we use AI tools to help with a selection process, to design an event flow, or in a problem-solving session, we remain responsible for the outcomes and outputs of our work.

4. Data Privacy & Trust

We treat the data people share with us with the respect and care it deserves. We are committed to protecting privacy and to ensuring that any use of AI does not compromise the trust placed in us.

We strive to use tools responsibly, understand where and how data is handled, and obtain consent whenever personal or sensitive information may be involved. As a non-negotiable rule, confidential or sensitive community and member data must not be input into public, third-party generative AI models.

5. Speaking Up for What’s Right

We will not shy away from holding those who develop AI technologies accountable. We recognise that stepping into this space as ChangemakerXchange also means questioning how the technology is developed, governed, and deployed.

Responsible leadership in the changemaking ecosystem we are part of entails speaking out for justice, transparency and accountability.

Our Journey Forward

These principles guide how we engage with AI in our daily work: with curiosity and care, and with a constant reminder of the human connections at the heart of all we do.

POLICY

The ChangemakerXchange Mindful AI Policy is a living document in Permanent Beta and is reviewed every six months.